Location

Washington, D.C.

“Garbage In, Garbage Out” is a 2019 report published by the Center on Privacy & Technology at Georgetown Law, one of several Center publications exploring law enforcement’s use of facial recognition technology. These reports aim to document the corrosive effects of facial recognition technology on privacy, civil rights, and civil liberties, including defendants’ due process rights. They also suggest substantive reforms to protect these rights against such incursions. The Center’s 2016 report “The Perpetual Lineup” uncovered the extent of police use of facial recognition. In contrast, “Garbage In, Garbage Out” specifically explores troubling uses of facial recognition technology in criminal investigations, such as locating suspects by feeding facial recognition programs heavily edited images, police sketches, or celebrity look-alike photos.

“Garbage In, Garbage Out” explores the troubling use of feeding facial recognition programs police sketches, celebrity look-alike photos, and other low-quality inputs in criminal investigations.

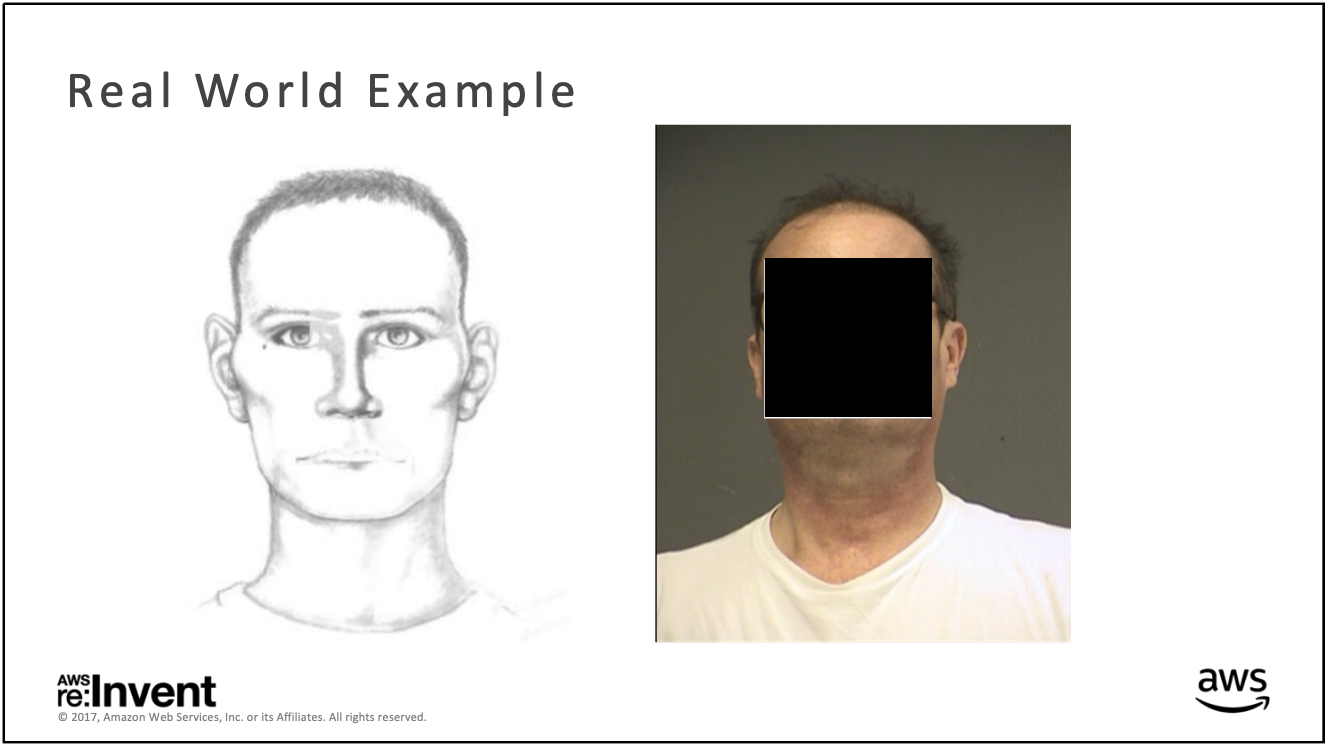

As described in “Garbage In, Garbage Out,” when attempting to locate a suspect, police officers can input “probe photos” of unknown individuals into face recognition algorithms for comparison against photographs in government databases. Absent any rules about which images may be used, police run face recognition searches on police sketches, low-quality stills from surveillance camera footage, social media posts, and even celebrity doppelgängers. If images are of poor quality or do not show the subject’s full face, officers may heavily edit the photos before running facial recognition, going so far as to mirror partial faces or replace open mouths with closed ones pulled from Google Images. Although most law enforcement agencies do not consider face recognition matches as positive identification sufficient to make an arrest, the lack of clear guidance on law enforcement’s use of face recognition technology means that police will sometimes make arrests almost solely on the basis of a face recognition match. Furthermore, when suspects are charged on this basis, they receive little or no information about the role face recognition played in their arrest.¹ The widespread and growing use of face recognition technology by law enforcement, absent strong guidelines on appropriate use, has opened the way for the erosion of due process in the American criminal justice system.

The practice of using face recognition and artists’ sketches is highlighted by Amazon Web Services in a case study about the capabilities of its face recognition software, Rekognition.

“Garbage In, Garbage Out” leverages meticulous research to uncover the dangerously inconsistent and unregulated uses of face recognition by law enforcement. It is the first report of its kind, presenting the general public with a detailed and highly readable look at facial recognition in the criminal justice system. The report systematically documents multiple instances in which major police departments have used police sketches, computer-edited images, and celebrity look-alike photos in conjunction with facial recognition databases in order to apprehend suspects.

Importantly, the report also shows that there are multiple instances where police officers have apprehended suspects solely or almost solely on the basis of a possible match from a facial recognition system, a practice that often flouts departmental and agency regulations.

As a result of this report, some police departments have changed their policies to restrict the use of heavily edited photos or forensic sketches in face recognition systems. “Garbage In, Garbage Out” has contributed greatly to legislative efforts focused on protections for due process in the age of facial recognition. The Utah State Legislature passed a law that enhances internal checks against misidentification in the face recognition process and requires prosecutorial disclosure, which may help alleviate endemic due process issues. A number of other states are considering similar legislation, or placing moratoria or bans on the use of face recognition outright. Armed with the findings of “Garbage In, Garbage Out,” the Center’s 2016 report “The Perpetual Lineup,” and trainings provided by the Center on Privacy & Technology, defense attorneys have begun challenging the use of face recognition in their clients’ criminal cases. The Center is also advocating for comprehensive federal legislation that limits the use of facial recognition and guarantees due process rights in the face of this new technology.

¹ Clare Garvie, “Garbage In. Garbage Out. Face Recognition on Flawed Data,” Garbage In. Garbage Out. Face Recognition on Flawed Data, May 16, 2019, https://www.flawedfacedata.com. ↩