Location

Cambridge, MA

Big data informs policymaking. But because big data is also highly sensitive, there are significant risks to individual privacy. SmartNoise aims to advance privacy-protective analyses by building a community around developing open-source software for differential privacy.

SmartNoise was established as a collaboration between Harvard’s Institute for Quantitative Social Science (IQSS) and Microsoft. Its platform keeps data private while enabling researchers from academia, government, non-profits, and the private sector to gain new insights that can rapidly advance human knowledge. Because the initiative is open source, researchers can also use the platform to make their own data sets available to other researchers worldwide.

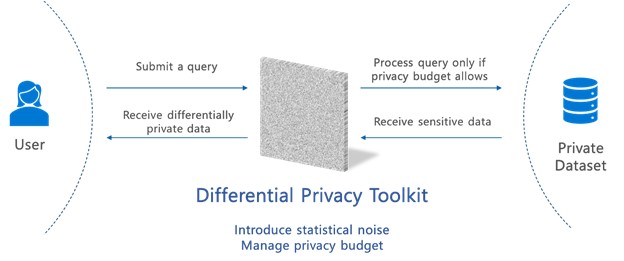

The toolkit injects noise into data to prevent disclosure of sensitive information and manage exposure risk.

Insights from datasets can help solve complex problems in areas such as health, the environment, economic inequality, and more. Unfortunately, because many datasets contain sensitive information, legitimate concerns about compromising privacy currently prevent the use of informative data. By injecting random noise into statistics computed on the dataset, differential privacy techniques make it possible to extract valuable insights from datasets while safeguarding the privacy of individuals.
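As a rough illustration of what "injecting random noise into statistics" can look like, the sketch below applies the standard Laplace mechanism to a simple count. It is not SmartNoise's implementation: the function names, the sample data, and the choice of epsilon are all hypothetical, and naive floating-point sampling like this is not suitable for production use, where a vetted library should be used instead.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from Laplace(0, scale).

    The difference of two independent exponential variables with mean
    `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that satisfy `predicate`.

    Assumes each person contributes at most one record, so adding or
    removing one person changes the true count by at most 1.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical data: ages of eight survey respondents.
ages = [23, 35, 41, 52, 67, 29, 74, 38]
rng = random.Random(0)  # seeded only to make the example repeatable

noisy = private_count(ages, lambda age: age >= 40, epsilon=1.0, rng=rng)
print(f"Noisy count of respondents aged 40 or older: {noisy:.2f}")
```

The analyst sees only the noisy count, which stays close to the true value of 4 on average while masking the presence or absence of any single respondent.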

However, the complexity of differential privacy has made these tools cost-prohibitive for civil society and non-profit organizations to implement. As Feldman et al. argue, when using differential privacy, “the trade-off between accuracy and privacy is not straightforward. Indeed, a major area of research in differential privacy is figuring out techniques that can improve that trade-off, making it possible to give stronger privacy guarantees at a given level of noise, or less noise at a given level of privacy.”¹ Consequently, practical adoption of differential privacy remains slow despite increasing demand from government agencies and research communities.
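One simple instance of this trade-off can be worked out for the standard Laplace mechanism, which is assumed here for illustration only; the source does not say which mechanisms are meant. For a statistic with sensitivity Δ released under privacy parameter ε, the noise scale is Δ/ε, and that scale is also the expected absolute error. The helper name and the budget values below are hypothetical.

```python
def laplace_scale(sensitivity: float, epsilon: float) -> float:
    """Noise scale for a Laplace release; equals the expected absolute error."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return sensitivity / epsilon


# Hypothetical privacy budgets, chosen only for illustration.
budgets = [0.1, 0.5, 1.0, 2.0]

# A count has sensitivity 1 when each person contributes at most one record.
expected_errors = {eps: laplace_scale(1.0, eps) for eps in budgets}

for eps, err in expected_errors.items():
    print(f"epsilon={eps}: expected absolute error of a count is about {err:.1f}")
```

Smaller values of ε give a stronger privacy guarantee but a proportionally larger expected error, which is the tension the quoted passage describes.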

The toolkit is designed to be a layer between queries and data systems to protect sensitive data.

SmartNoise created a trustworthy suite of differential privacy tools that serves as a public resource for any organization wanting to use differential privacy. It focuses on supporting scientifically oriented research and exploration in the public interest in three ways: enabling archival data repositories to take on sensitive data, allowing government agencies and companies to share data safely with researchers, and increasing the robustness of research findings. SmartNoise seeks to make data more accessible and usable, and to empower researchers to deploy differential privacy tools simply and confidently.

The collaboration between industry and academia allows SmartNoise to create scalable frameworks and tools for companies to share data with university researchers in a secure and privacy-preserving way.

SmartNoise has implemented its tools in both businesses and government agencies. Microsoft has deployed differential privacy tools in technologies such as Windows and Workplace Analytics. Companies, including Facebook, have also become more willing to make datasets available to researchers through the OpenDP platform.

SmartNoise has been used to study student outcomes by granting researchers access to education data from California and Texas. Broadband usage data has also been unlocked to help the Federal Communications Commission and policymakers expand internet access to under-served communities and bridge the digital divide.

To reduce the cost of adoption, SmartNoise launched an early adopter acceleration program to help civil society and non-profit organizations use differential privacy tools without having to build their own code or platform. SmartNoise experts will provide technical assistance to incorporate differential privacy into the data-sharing processes of projects where unlocking data or insights will benefit society.

¹ Vitaly Feldman et al., “Differential Privacy: Issues for Policymakers,” June 29, 2020, https://simons.berkeley.edu/news/differential-privacy-issues-policymakers