Locations

Bengaluru, India

Cambridge, MA

New Delhi, India

Yorktown Heights, NY

Today, AI systems are increasingly used by governments and businesses to make decisions that impact the lives of millions. These systems are only as fair and just as the data put into them, so it is critical for practitioners to develop and train systems with minimal unwanted bias and to develop algorithms whose decisions can be reasonably explained.

The AI Fairness 360 Toolkit was developed by IBM Research to check for, understand, and mitigate unwanted bias in datasets and machine learning models. It was designed with extensibility in mind and encourages open-source contribution of metrics, explainers, and bias mitigation algorithms from members of the public. Along with its companion Adversarial Robustness 360 Toolbox, AI Explainability 360 Toolkit, and AI Factsheets effort, the goal of this initiative is to engender trust in AI and make the world more equitable for all.

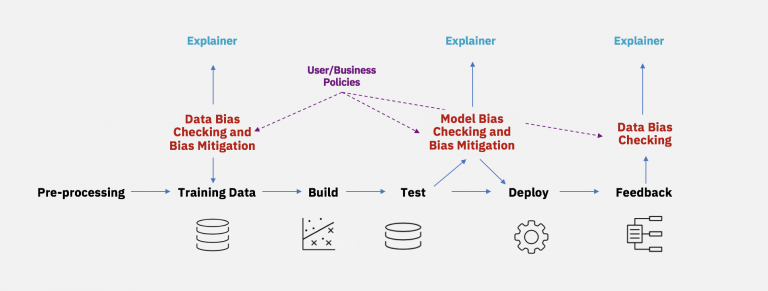

The AIF360 toolkit allows industry practitioners to mitigate bias throughout the AI lifecycle.

Machine learning models increasingly drive high-stakes political, social, and economic decisions such as credit, employment, school admissions, and parole. They have the potential to cause objectionable discrimination when a decision places certain privileged groups at a systematic advantage and certain unprivileged groups at a systematic disadvantage. Bias can enter an AI system through technical and design decisions, through the training data set, or through the pre-existing cultural, social, or institutional context in which the AI is deployed. Therefore, to create a future in which AI-automated and AI-induced bias, injustice, and inequity are minimized, we need resources that help us identify and mitigate bias.

The AI Fairness 360 Python package is a comprehensive set of metrics and algorithms to test for and mitigate biases. Overall, AIF360 contains 70+ fairness metrics and 11 state-of-the-art bias mitigation algorithms developed by IBM and the wider research community. The toolkit also includes interactive demonstrations which introduce key machine learning concepts and capabilities as well as tutorials and other notebooks to offer deeper, data scientist-oriented introductions.
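
To make the idea of a fairness metric concrete, the sketch below computes two widely used group-fairness metrics by hand: statistical parity difference and disparate impact, both of which compare how often the unprivileged and privileged groups receive the favorable outcome. AIF360 exposes metrics with these names (for example, as methods of `BinaryLabelDatasetMetric`), but this stdlib-only version does not call AIF360; the helper names and toy data are hypothetical and chosen for illustration.

```python
from typing import Sequence


def selection_rate(preds: Sequence[int], groups: Sequence[int], group: int) -> float:
    """Share of favorable outcomes (1) among samples belonging to `group`."""
    outcomes = [p for p, g in zip(preds, groups) if g == group]
    if not outcomes:
        raise ValueError(f"no samples for group {group!r}")
    return sum(outcomes) / len(outcomes)


def statistical_parity_difference(preds, groups, unprivileged=0, privileged=1) -> float:
    """P(Y_hat=1 | unprivileged) - P(Y_hat=1 | privileged); 0 indicates parity."""
    return selection_rate(preds, groups, unprivileged) - selection_rate(preds, groups, privileged)


def disparate_impact(preds, groups, unprivileged=0, privileged=1) -> float:
    """P(Y_hat=1 | unprivileged) / P(Y_hat=1 | privileged); 1 indicates parity.

    Raises ZeroDivisionError if the privileged group never receives a favorable outcome.
    """
    return selection_rate(preds, groups, unprivileged) / selection_rate(preds, groups, privileged)


# Toy decisions (1 = favorable) and protected-attribute values (0 = unprivileged, 1 = privileged).
preds = [1, 0, 0, 1, 1, 1, 0, 1]
groups = [0, 0, 0, 0, 1, 1, 1, 1]

print(statistical_parity_difference(preds, groups))  # -0.25
print(round(disparate_impact(preds, groups), 3))      # 0.667
```

In this toy data the unprivileged group is selected 50% of the time versus 75% for the privileged group. A common rule of thumb, the "four-fifths rule", flags disparate impact values below 0.8 as a sign of possible adverse impact.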

AIF360 differs from other currently available open-source efforts in its focus on bias mitigation rather than on metrics alone. It is relevant to industries ranging from healthcare to government to financial services, and it offers a Python API for developers.

The toolkit is designed to be used by a data scientist or developer tasked with creating an AI model, to check for and mitigate unwanted bias at every phase of model development. Specifically, it can be used during three phases of the data science lifecycle: to analyze and mitigate bias in the training data; to analyze and mitigate bias in the algorithms that create the machine learning model; and to analyze and mitigate bias in the predictions the model makes once it is deployed.[1]
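
As a minimal sketch of intervening at the deployment phase, assuming only a trained model's scores and each sample's group are available, the snippet below changes how scores become decisions by selecting the top-scoring members of each group so that every group is selected at the same target rate. This is a deliberately simplified stand-in chosen for illustration, not one of AIF360's post-processing algorithms; the function name `equalize_selection_rates` and the sample data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def equalize_selection_rates(
    scores: Sequence[float], groups: Sequence[int], target_rate: float
) -> List[int]:
    """Turn model scores into 0/1 decisions so that, within each group,
    about `target_rate` of members receive the favorable outcome (1).

    Hypothetical helper for illustration: the trained model is not changed,
    only the way its scores are converted into decisions.
    """
    if not 0.0 <= target_rate <= 1.0:
        raise ValueError("target_rate must be between 0 and 1")
    # Group sample indices by protected-attribute value.
    members: Dict[int, List[int]] = defaultdict(list)
    for i, g in enumerate(groups):
        members[g].append(i)

    decisions = [0] * len(scores)
    for idx in members.values():
        # Within each group, the k highest-scoring members get the favorable label,
        # which (ties aside) amounts to a group-specific score cutoff.
        ranked = sorted(idx, key=lambda i: scores[i], reverse=True)
        k = round(target_rate * len(ranked))
        for i in ranked[:k]:
            decisions[i] = 1
    return decisions


scores = [0.9, 0.4, 0.35, 0.2, 0.95, 0.8, 0.7, 0.6]
groups = [0, 0, 0, 0, 1, 1, 1, 1]

# A single global cutoff of 0.5 selects 1 of 4 in group 0 but 4 of 4 in group 1.
print([int(s >= 0.5) for s in scores])                # [1, 0, 0, 0, 1, 1, 1, 1]
print(equalize_selection_rates(scores, groups, 0.5))  # [1, 1, 0, 0, 1, 1, 0, 0]
```

Forcing equal selection rates this way can reduce accuracy and requires the protected attribute at decision time, which is part of why more careful post-processing methods exist.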

Corresponding to these three areas of intervention, AIF360's algorithms fall into three categories: pre-processing, in-processing, and post-processing. Users can choose a category based on where they are able to intervene in a machine learning pipeline. AIF360 recommends using the earliest category in the pipeline that a user has the permission and ability to change, because intervening earliest offers the greatest opportunity to correct bias upstream in an AI system.[2]
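
Reweighing, one of the pre-processing algorithms AIF360 includes (`aif360.algorithms.preprocessing.Reweighing`), shows why early intervention is attractive: it leaves the model untouched and only changes how much each training example counts. The from-scratch sketch below assumes binary labels and a single protected attribute and does not call AIF360 itself. It gives each (group, label) combination the weight P(group) · P(label) / P(group, label), so that group membership and label look statistically independent in the weighted training data. The helper names and toy data are hypothetical.

```python
from collections import Counter
from typing import List, Sequence


def reweighing_weights(groups: Sequence[int], labels: Sequence[int]) -> List[float]:
    """Per-sample weights P(g) * P(y) / P(g, y) for each sample's (group, label).

    Hypothetical helper: a from-scratch illustration of reweighing, not the AIF360 API.
    """
    if len(groups) != len(labels) or not labels:
        raise ValueError("groups and labels must be non-empty and equally long")
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # Expected count under independence divided by the observed count:
    # (n_g * n_y / n) / n_gy == n_g * n_y / (n * n_gy)
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_favorable_rate(weights, groups, labels, group) -> float:
    """Weighted share of favorable labels (1) within one group."""
    total = sum(w for w, g in zip(weights, groups) if g == group)
    favorable = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    return favorable / total


# Toy data: group 0 is unprivileged, group 1 privileged; label 1 is favorable.
groups = [0, 0, 0, 0, 1, 1, 1, 1]
labels = [1, 0, 0, 0, 1, 1, 1, 0]

weights = reweighing_weights(groups, labels)
print([round(w, 3) for w in weights])  # [2.0, 0.667, 0.667, 0.667, 0.667, 0.667, 0.667, 2.0]
print(round(weighted_favorable_rate(weights, groups, labels, 0), 3))  # 0.5
print(round(weighted_favorable_rate(weights, groups, labels, 1), 3))  # 0.5
```

Before reweighing, the favorable rate is 25% in group 0 and 75% in group 1; after weighting, both are 50%. The weights can then be passed to any learner that accepts per-sample weights; many scikit-learn estimators, for example, take them through the `sample_weight` argument of `fit`.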

Since its launch, AIF360 has become the top package on GitHub related to AI fairness. Its widespread adoption can be attributed to a global, comprehensive outreach and scaling strategy: AIF360 has online Slack and GitHub communities and has been presented at a series of international conferences.

Due to the success of AIF360, IBM has released three similar developer toolkits: AI Explainability 360 (AIX360), Adversarial Robustness 360 Toolbox (ART), and AI Factsheets.

Future plans include the continued development and scaling of similar solutions in collaboration with peers in industry, academia, government, and civil society, such as those who participate in the Partnership on AI’s ABOUT ML initiative.