Locations

Palo Alto, CA

Redwood City, CA

The Ethical Operating System (EthicalOS) can help tech makers, product managers, engineers, and others get ahead of problems before they happen. It is designed to support better product development, faster deployment, and more impactful innovation, all while minimizing technical and reputational risks. The toolkit can inform your design process today and help you manage risks around existing technologies in the future. EthicalOS is freely shareable under a Creative Commons license.

The ten-year runway is the period over which we typically see massive disruption of technological ecosystems and social norms.

It took Facebook 10 years to go from one user to one billion users; 10 years for Bitcoin to go from a hypothetical idea discussed in a scientific article to a $151 billion market capitalization; 10 years for Uber to go from its first driver to its IPO amid a global disruption of work norms and transportation; and 10 years for the Civil Rights movement for racial justice in the United States to go from its first march to the passage of national Civil Rights legislation. The goal of EthicalOS is to start assessing new technologies and technology-related policies, publicly and collaboratively, at the beginning of these ten-year runways rather than at the end, which is often the case now, when a technology or movement has already fully scaled and achieved "liftoff."

The EthicalOS aims to shift the technology industry’s focus to carefully considered risk avoidance rather than frantically applied risk mitigation.

Tech companies are under extraordinary pressure to become better ethical actors. Nobody wants to find out what the next version of "fake news," "propaganda bots," or "smartphone addiction" will be. We've had enough unpleasant surprises. The general public, government regulators, investors, and people who work in tech companies all want to feel more hopeful that we can identify and prevent big risks to truth, democracy, privacy, security, and mental health before they catch us collectively off guard again.

The challenge, however, is that making ethical decisions about the technology you build or decide to use essentially requires you to predict the future. How do you know what the right, more ethical action is? You have to anticipate the long-term consequences of your choices; otherwise, you're guessing blindly. Is this good for humanity? Is this good for society? You can't answer those questions if you're not thinking like a futurist.

The EthicalOS is an "early warning system" for long-term ethical risks and unintended social harms of emerging technologies.

The first tool, a set of 14 Risky Future scenarios, is designed to crack open and expand people's imaginations. The scenarios give specific examples of technologies that are already becoming more advanced and more widespread. Most creators in tech, from start-up founders to investors to engineers, tend to focus on the problems they are trying to solve rather than on new problems they might accidentally create. It is important to correct for this bias with scenarios that push people to use not just their positive imagination but their shadow imagination as well. Working through a range of scenarios is a good way to stretch that imagination.

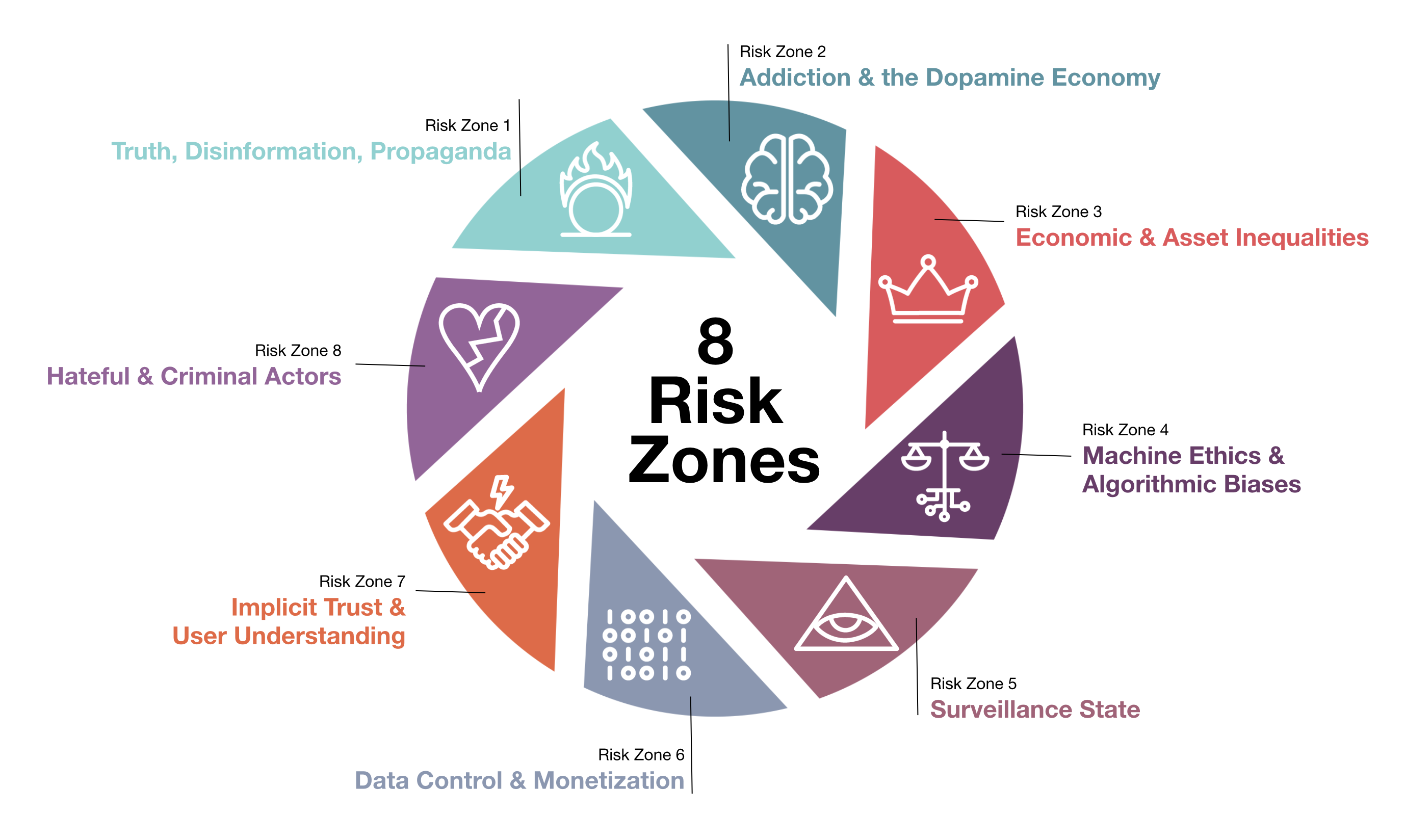

The second tool, the Risk Mitigation checklist, invites tech companies to work through 21 questions designed to uncover potential social harms across 8 risk zones, from Truth and Propaganda to Algorithmic Biases, Addiction, and Hateful and Criminal Actors. The checklist encourages companies to consider all 21 questions, even those that don't initially seem relevant, because the tool is meant to surface surprising risks. For example, if you are a drone technology company, you are probably already thinking about risks related to privacy and weaponization. But what about the long-term impact of living in a society where drones are ubiquitous? Are you specifically concerned about state surveillance and potential authoritarian uses? If not, spend time with Risk Zone 5: Surveillance State.

The third tool, Future-Proofing Strategies, is about figuring out how tech companies, investors, governments, and the public can work together to build a better tech future. For example, one strategy introduces the idea of Ethical Bounty Hunters: what if tech companies paid "bounties" to outside individuals who identify the potential social risks of new services and technologies? Hackers are already rewarded for alerting companies to security flaws. Ethical bounties could target different areas, such as "mental health impact," "risks to democracy," and "structural racism, sexism, or other inequality." There could even be an annual ethical hacking convention on the scale of DEF CON, the enormously influential hacker convention.

Omidyar Network and Juggernaut (a movement-building agency) are responding to the need for a toolkit that gives tech workers (from product managers and engineers to designers and founders) a clear, accessible resource for stewarding responsible tech by sparking dialogue and tackling ethical risk zones. The Ethical Explorer Pack will launch later this year at ethicalexplorer.org. Juggernaut will publish a LinkedIn Learning course for makers and consumers of technology this spring, and it regularly holds advisory workshops for venture portfolio companies; it has now trained more than 200 companies around the world to apply the checklist to their products.

At the Institute for the Future (IFTF), the team has recognized a need to further adapt and develop EthicalOS for the civic sector, helping elected officials and policymakers get ahead of the next wave of risks; it recently completed trainings for U.S. mayors, the California State Legislature, and others. IFTF has also launched public training for EthicalOS on the online learning platform Coursera, where the toolkit is a centerpiece of the five-course series on Futures Thinking. More than 10,000 people worldwide have been trained so far.