Location

Cambridge, MA

Gender Shades is a research project that evaluates the accuracy of AI-powered gender classification products. The project’s work has demonstrated that the facial recognition software of IBM, Microsoft, and Face++ systematically fails to identify some of our most vulnerable communities. Gender Shades worked with these companies to build representative databases and with lawmakers to craft protective policies.

117M Americans are in facial image databases used by law enforcement

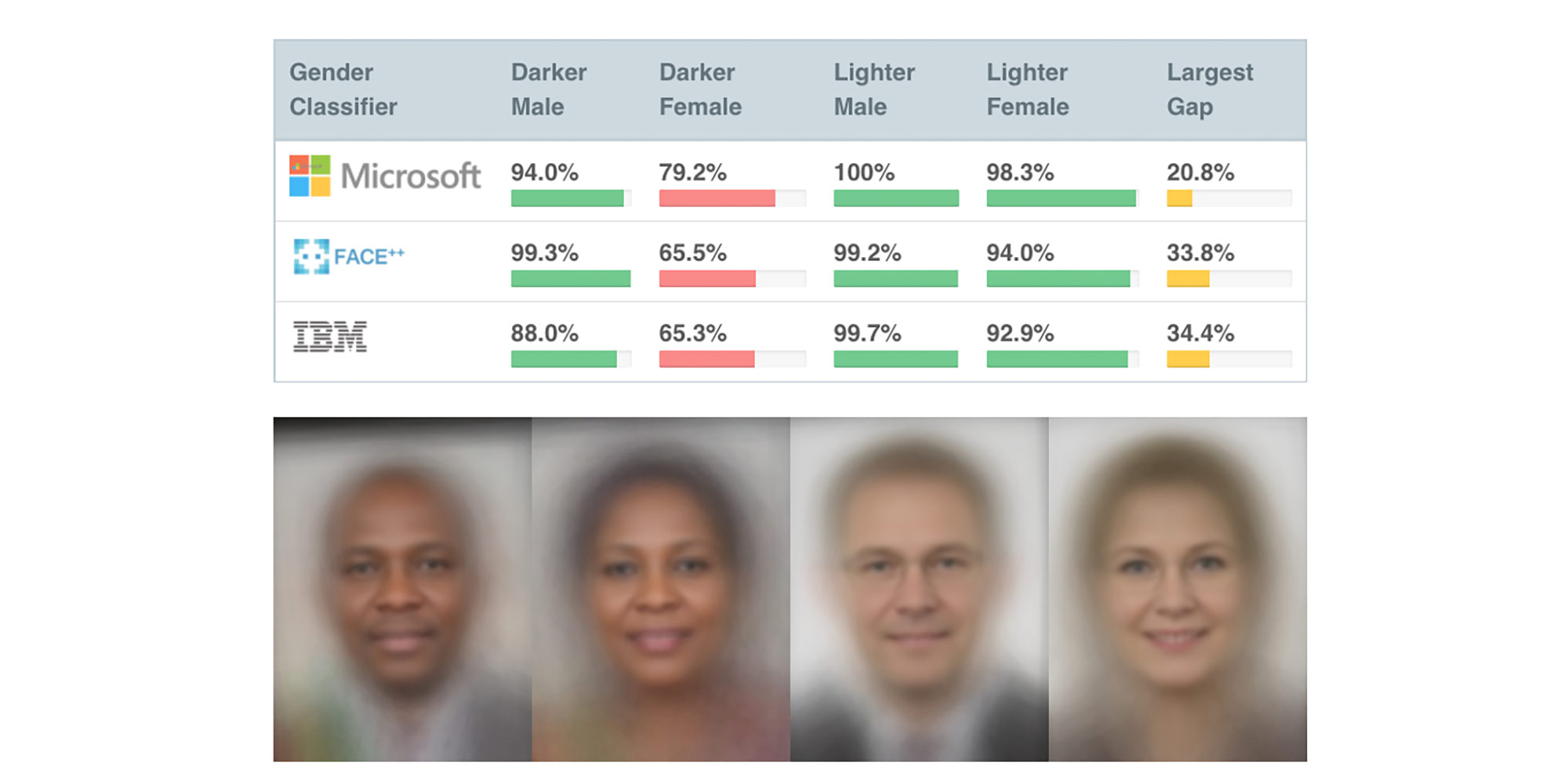

Over the course of its research, the project found error rates of up to roughly 35% when classifying the gender of darker-skinned women, compared with about 1% for lighter-skinned men. The use of facial recognition software in hiring and hotels is expected to grow 72% in the next five years.

Gender Shades indicates that three large tech companies showed large accuracy gaps when classifying darker-skinned women.

Machine learning algorithms now determine who is fired, hired, promoted, granted a loan or insurance, and even how long someone spends in prison.¹ ² However, these same algorithms can discriminate based on classes like gender and race. As the use of facial recognition software continues to grow, biased algorithms can increasingly harm people’s lives. A yearlong research investigation across 100 police departments revealed that African-American individuals are more likely to be stopped by law enforcement and subjected to face recognition searches than individuals of other ethnicities.³ Gender Shades shows that currently available facial recognition software is more likely to render false positives for darker-skinned individuals and for women.

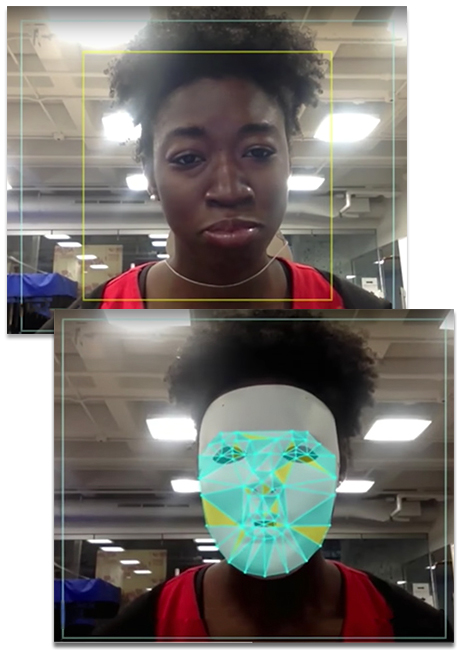

Founder Joy Buolamwini tests various facial recognition systems on herself. The software only detects her when she puts on a white mask.

Gender Shades has demonstrated that the facial recognition software of IBM, Microsoft, and Face++ systematically fails to correctly classify some of our most vulnerable communities. Gender Shades advocated that these companies change their design, development, and deployment practices and encouraged them to sign the Safe Face Pledge. Gender Shades also worked with lawmakers to craft protective policies. After analyzing results on 1,270 unique faces, the Gender Shades authors uncovered severe gender and skin-type bias in gender classification. Gender and skin-type skews in existing datasets led to the creation of a new Pilot Parliaments Benchmark, composed of parliamentarians from the top three African and top three European countries, as ranked by gender parity in their parliaments as of May 2017. The authors found that facial recognition software misclassifies darker-skinned women far more often than lighter-skinned men, with a gap in error rates of nearly 34 percentage points.
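The kind of disaggregated evaluation described above, where error rates are reported per subgroup rather than as a single overall figure, can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: the function names, the subgroup labels, and the toy records are all hypothetical, and the numbers it produces are not results from the study.

```python
from collections import defaultdict


def error_rates_by_subgroup(records):
    """Return {subgroup: error rate}.

    Each record is a (subgroup, true_label, predicted_label) tuple.
    """
    totals = defaultdict(int)
    errors = defaultdict(int)
    for subgroup, true_label, predicted_label in records:
        totals[subgroup] += 1
        if predicted_label != true_label:
            errors[subgroup] += 1
    return {group: errors[group] / totals[group] for group in totals}


def largest_gap(rates):
    """Return (worst subgroup, best subgroup, difference in error rate)."""
    worst = max(rates, key=rates.get)
    best = min(rates, key=rates.get)
    return worst, best, rates[worst] - rates[best]


# Made-up toy records for illustration only; NOT data from the study.
toy_records = [
    ("darker_female", "F", "M"),
    ("darker_female", "F", "F"),
    ("darker_female", "F", "F"),
    ("darker_female", "F", "M"),
    ("lighter_male", "M", "M"),
    ("lighter_male", "M", "M"),
    ("lighter_male", "M", "M"),
    ("lighter_male", "M", "M"),
]

rates = error_rates_by_subgroup(toy_records)
worst, best, gap = largest_gap(rates)
print(f"error rates: {rates}")
print(f"largest gap: {worst} vs {best} = {gap:.0%}")
```

Reporting the gap between the best- and worst-served subgroups, rather than only the average error, is what makes a disparity like the one Gender Shades found visible: a classifier can look accurate overall while failing one group far more often than another.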

Gender Shades was successful in highlighting the disparities in facial recognition software and urging change in the industry. After Gender Shades pointed out Microsoft’s 21% worse error rate for women with darker skin, the company recreated its training data and reported a 9x improvement in accuracy for darker-skinned women and 20x for darker-skinned men.⁴ Moreover, the company has worked with public sector officials to improve the facial recognition industry as well. Gender Shades helped pass the law in San Francisco that banned city agencies’ use of facial recognition software and partnered with the Congressional Black Caucus to issue a letter to Amazon to improve its Rekognition systems.

The research has also been used as a support tool for legislative and political change. Gender Shades was instrumental in providing quantitative support for two pieces of legislation (the Algorithmic Accountability Act and the No Biometric Barriers Act). Lastly, Senator Kamala Harris has referred to the work in her requests to the FBI to investigate facial recognition software.⁵

On an individual level, the founders have continued to push this work forward through multiple private and public sector channels. Both authors of the paper were selected as part of The Bloomberg 50 in 2018. The 2020 Sundance Film Festival will feature a documentary film that highlights their work.

¹ Cathy O’Neil, Weapons of Math Destruction (Crown Publishing, September 2016)

² Virginia Eubanks, Automating Inequality (St. Martin’s Press, January 2018)

³ Clare Garvie, Alvaro Bedoya, and Jonathan Frankle, The Perpetual Line-Up: Unregulated Police Face Recognition in America (Georgetown Law: Center on Privacy & Technology, October 2016)

⁴ Kyle Wiggers, IBM releases Diversity in Faces, a dataset of over 1 million annotations to help reduce facial recognition bias (VentureBeat, January 2019)

⁵ Senator Kamala Harris, Letter to Director Chris Wray (September 2019, accessible at: https://www.scribd.com/embeds/388920671/content#from_embed)